I vibe-coded an AI photo organizer for Lychee

I've mentioned before that I use Lychee to host my photo website. I recently uploaded a backlog of a few hundred photos to the Unsorted section of that site, figuring I could organize them later. Then I realized: I could use AI to help organize them! So I used Claude Code to write lychee-ai-organizer:

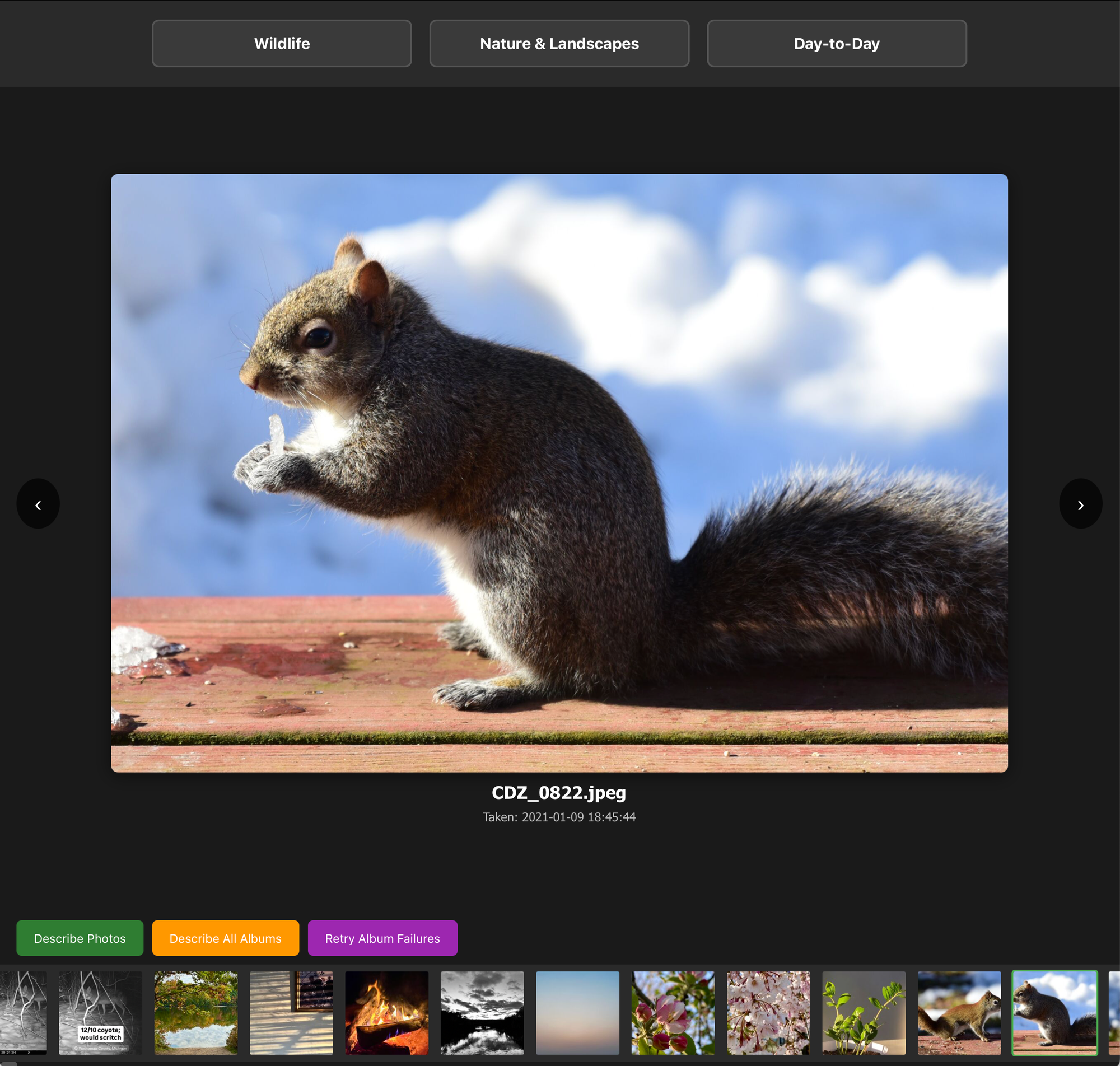

lychee-ai-organizer examines each unsorted photo and suggests albums in which it may belong.

I'll be the first to point out: this is a hack. It reads from and writes to Lychee's MySQL database directly, partly because I know nothing about Lychee's REST API and partly because I figured I might need to make some queries that wouldn't be possible (efficiently or at all) with the API. So right now this is effectively a disposable program, though it would be nice to improve it such that it supports a PostgreSQL backend too.

And the UI could be much more usable.

Claude Code

That said, I wrote this in just a few hours using Claude Code, and once again I am (grudgingly) impressed at how well Claude Code works. I only made a few minor edits to the program manually; my work was focused on requirements, validation, high-level architecture, and data gathering.

This would absolutely have taken me longer to write by hand. So much longer, in fact, that I never would have bothered to write it without Claude Code. This means it joins gallerygen and macos-screenlock-mqtt in the list of "useful stuff I wouldn't have found time to write by hand."

I've historically been an LLM skeptic, and for many use cases I still am, but the results speak for themselves.

How it works

The term "vibe coding" minimizes much of the actual work involved in programming. I still had to figure out how this program should work, write specifications and manage Claude Code, and iterate on that spec as needed.

The core of the program relies on an Ollama instance hosting two models: a vision model used to describe photos, and another model used to synthesize descriptions and make album organization suggestions.

Phase 1: Photo analysis

First, we go through every photo in Lychee and ask a vision-capable LLM for a description of the photo. This prompt looks like:

Analyze this photo and provide a concise description in 2 sentences. Focus on:

- Subject matter and composition

- Photographic style and unique characteristics

- Overall mood and atmosphere

In my usage of this program, I've had good results with qwen2.5vl:3b. This runs on my home server, which has a 22GB-modded 2080Ti for running this sort of thing. (It's also solar-powered, for the record!)
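For illustration, here is roughly how a photo could be sent to Ollama with that prompt. This is a minimal Go sketch, not the code from lychee-ai-organizer: the helper names (`buildDescribeRequest`, `describePhoto`), the example base URL, and the error handling are my assumptions. The endpoint (`/api/generate`), the base64-encoded `images` field, and `stream: false` follow Ollama's documented API.

```go
package main

import (
	"bytes"
	"encoding/json"
	"fmt"
	"net/http"
)

// describePrompt is the Phase 1 prompt quoted above.
const describePrompt = `Analyze this photo and provide a concise description in 2 sentences. Focus on:
- Subject matter and composition
- Photographic style and unique characteristics
- Overall mood and atmosphere`

// generateRequest mirrors the fields of Ollama's /api/generate request that
// this sketch needs. encoding/json base64-encodes each []byte automatically,
// which is the encoding Ollama expects for images.
type generateRequest struct {
	Model  string   `json:"model"`
	Prompt string   `json:"prompt"`
	Images [][]byte `json:"images,omitempty"`
	Stream bool     `json:"stream"`
}

// generateResponse holds the only response field used here.
type generateResponse struct {
	Response string `json:"response"`
}

// buildDescribeRequest (hypothetical helper) encodes the request body.
func buildDescribeRequest(model string, photo []byte) ([]byte, error) {
	return json.Marshal(generateRequest{
		Model:  model,
		Prompt: describePrompt,
		Images: [][]byte{photo},
		Stream: false, // ask for one complete JSON reply instead of a stream
	})
}

// describePhoto (hypothetical helper) posts the photo to an Ollama server at
// baseURL, e.g. "http://localhost:11434", and returns the model's description.
func describePhoto(baseURL, model string, photo []byte) (string, error) {
	body, err := buildDescribeRequest(model, photo)
	if err != nil {
		return "", err
	}
	resp, err := http.Post(baseURL+"/api/generate", "application/json", bytes.NewReader(body))
	if err != nil {
		return "", err
	}
	defer resp.Body.Close()
	if resp.StatusCode != http.StatusOK {
		return "", fmt.Errorf("ollama returned %s", resp.Status)
	}
	var out generateResponse
	if err := json.NewDecoder(resp.Body).Decode(&out); err != nil {
		return "", err
	}
	return out.Response, nil
}
```

A caller would read the photo's bytes from disk and pass them in along with the vision model's name, for example `describePhoto("http://localhost:11434", "qwen2.5vl:3b", photoBytes)`.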

Phase 2: Album analysis

Then, we ask the synthesis model for a description of every top-level Lychee photo album, based on the descriptions of every photo in it:

Based on the following photo descriptions from an album, create a concise summary that captures the essence of this photo collection:

Photo descriptions:

%s

Date range: %s to %s

Provide a cohesive summary that synthesizes the common themes, subjects, and mood across these photos.

I'm using qwen3:8b for this, with good results.

A problem arose, though: for albums with more than roughly 30 to 50 photos, my LLM input was truncated because it exceeded qwen3's context window. So, on albums with more than 30 photos, lychee-ai-organizer first asks the model to summarize each batch of 30 photos. (If there are more than 30 batches, it then asks the model to summarize the summaries, and so on.) Then, instead of providing each individual photo's description when asking for an album description, we provide these summaries. This seems to work well enough. (I think this is similar to context compaction in Claude Code?)
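The batching scheme is easier to see in code. Here is a minimal Go sketch of the idea, assuming a `summarize` callback that stands in for the call to the synthesis model. The names `condense` and `summarizeFunc` are hypothetical, and the real program may structure this differently.

```go
package main

// batchSize matches the 30-photo batches described above.
const batchSize = 30

// summarizeFunc stands in for a call to the synthesis model. It is a
// hypothetical signature, not the one used by lychee-ai-organizer.
type summarizeFunc func(texts []string) (string, error)

// condense reduces texts to at most batchSize entries. While there are too
// many, it summarizes them in batches of batchSize and then repeats the
// process on the resulting summaries.
func condense(texts []string, summarize summarizeFunc) ([]string, error) {
	for len(texts) > batchSize {
		var summaries []string
		for start := 0; start < len(texts); start += batchSize {
			end := start + batchSize
			if end > len(texts) {
				end = len(texts) // the last batch may be short
			}
			s, err := summarize(texts[start:end])
			if err != nil {
				return nil, err
			}
			summaries = append(summaries, s)
		}
		texts = summaries
	}
	return texts, nil
}
```

With this shape, an album of 30 photos or fewer passes through untouched, and whatever `condense` returns is what gets substituted into the album prompt in place of the raw photo descriptions.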

Phase 3: Photo suggestions

Finally, to get the album suggestions for a given photo, we provide the synthesis model with the photo's description and a list of albums (with descriptions), and ask it to pick the top three albums for the photo:

Given this photo description:

%s

Photo date: %s

And these available albums:

%s

Analyze this photo and suggest the top 3 most appropriate albums for it. Consider:

- Thematic similarity (subject matter, content type)

- Contextual relevance (setting, event type, activity)

- Other clues (album title vs. photo subject, album date vs. photo date)

You must respond with valid JSON in exactly this format:

{
  "album_ids": ["AlbumID1", "AlbumID2", "AlbumID3"]
}

Rules:

- Use only Album IDs that appear in the available albums list above

- Return exactly 3 Album IDs in order of best match first

- Respond with only the JSON object, no other text

- The "album_ids" field must contain an array of strings

That's all! Everything else about the program is an uninteresting React app with a Go backend.
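One detail worth sketching: a model won't always follow the rules in the Phase 3 prompt, so its reply has to be checked before anything is done with it. Here is a minimal Go sketch of that validation, assuming the reply arrives as a string and the known album IDs are available as a set. The `parseSuggestion` helper and `suggestion` type are hypothetical names, not taken from lychee-ai-organizer.

```go
package main

import (
	"encoding/json"
	"fmt"
)

// suggestion matches the JSON shape requested in the Phase 3 prompt.
type suggestion struct {
	AlbumIDs []string `json:"album_ids"`
}

// parseSuggestion (hypothetical helper) decodes the model's reply and enforces
// the prompt's rules: only a JSON object, exactly 3 IDs, and every ID taken
// from the set of known albums.
func parseSuggestion(reply string, known map[string]bool) ([]string, error) {
	var s suggestion
	// json.Unmarshal rejects any extra text before or after the JSON object.
	if err := json.Unmarshal([]byte(reply), &s); err != nil {
		return nil, fmt.Errorf("reply is not valid JSON: %w", err)
	}
	if len(s.AlbumIDs) != 3 {
		return nil, fmt.Errorf("expected 3 album IDs, got %d", len(s.AlbumIDs))
	}
	for _, id := range s.AlbumIDs {
		if !known[id] {
			return nil, fmt.Errorf("unknown album ID %q", id)
		}
	}
	return s.AlbumIDs, nil
}
```

On an error, a caller could retry the request or skip the photo; which of those is better depends on how often the model misbehaves.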