LLM Q&A Technique: Context Priming

Even the best LLMs, equipped with web search & research tools, can still hallucinate when asked questions about obscure topics. In today's case, that topic is how to accomplish various tasks using the NATS CLI.

I combat this by using a technique called "context priming." (I didn't invent this term, and I'm hoping that this is a correct use of it; I'm sure someone will let me know otherwise!)

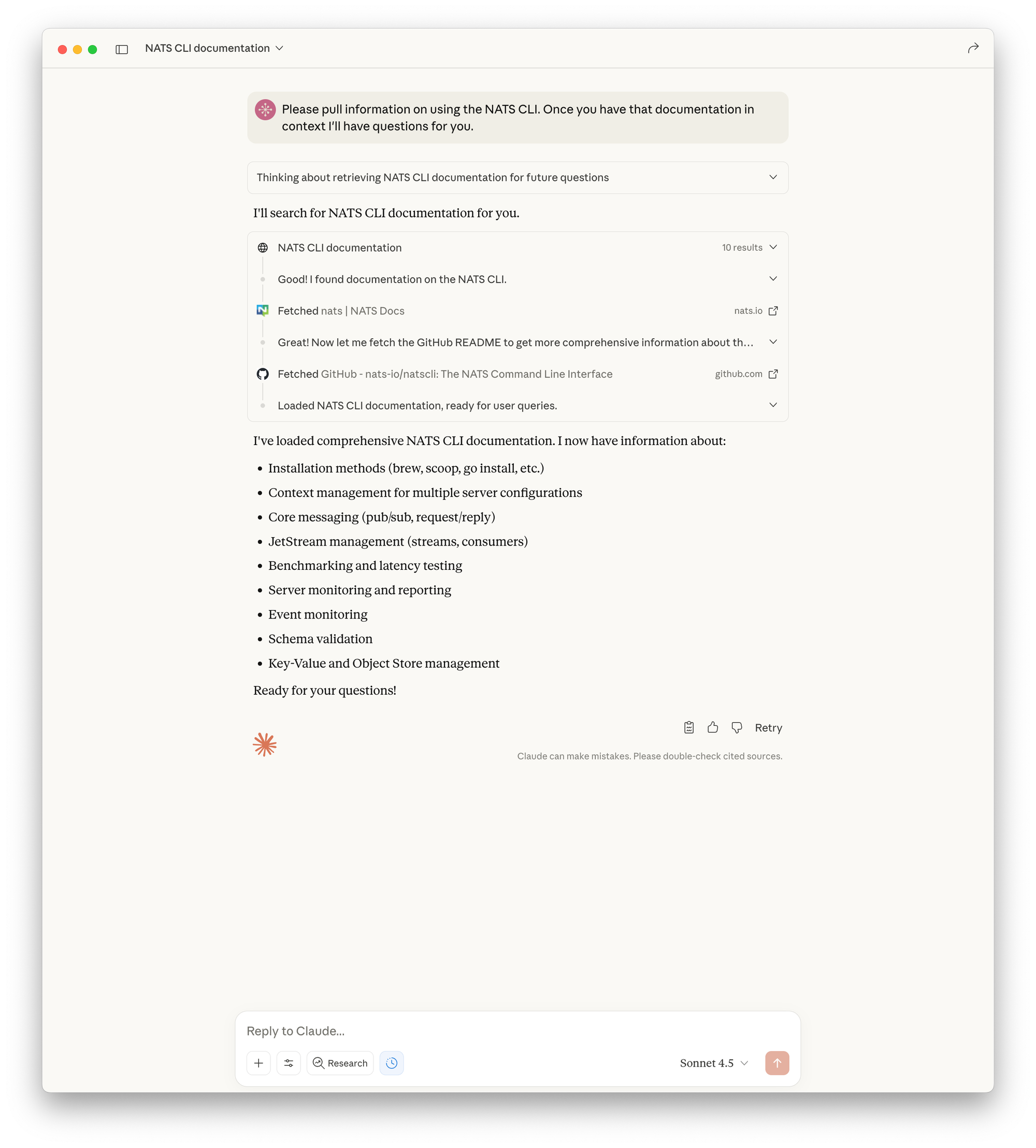

All this means is that, at the beginning of our conversation, I'll ask Claude to find reference materials about the topic I plan to ask about. This looks like the following screenshot:

In this example, Claude used its built-in web search tool; in other situations (or when I'm using a different LLM frontend) my SearXNG or Tavily MCP tools might be used instead.

Regardless, this means the conversation starts out with accurate documentation in the context, which informs every response that follows. In this case, I was able to ask Claude a bunch of in-depth questions about accomplishing certain tasks in the NATS CLI, and its answers were accurate, with no hallucinations.
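
If you wanted to script the same ordering rather than type it into a chat window, a minimal sketch might look like the following. Everything here is an assumption for illustration: the function names, the wording of the priming prompt, and the example question are hypothetical, and no real LLM API is called. The only point is that the priming request goes first and the real questions come after it.

```python
def priming_prompt(topic: str) -> str:
    """Build the opening message that asks the model to gather references first.

    The wording is a hypothetical example, not the exact prompt from the screenshot.
    """
    return (
        "Before I ask any questions, please search for the official "
        f"documentation and reference material on {topic}, and summarize "
        "what you find. I'll ask my questions afterwards."
    )


def primed_conversation(topic: str, questions: list[str]) -> list[dict[str, str]]:
    """Return the user turns in order: the priming request, then the questions.

    In a real chat, the model's replies (including its search results)
    would land between these turns; they are omitted here.
    """
    turns = [{"role": "user", "content": priming_prompt(topic)}]
    turns.extend({"role": "user", "content": q} for q in questions)
    return turns


if __name__ == "__main__":
    # Hypothetical question, used only to show the ordering of turns.
    for turn in primed_conversation("the NATS CLI", ["How do I create a stream?"]):
        print(f"{turn['role']}: {turn['content']}")
```

The design choice worth noting is that the priming turn is separate from the questions, so the retrieved documentation is already in the context before the first real question is asked.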